What if I were to tell you that the e-commerce site you’ve been developing could be hosted as a static website that would offer it premier performance? Or that the document management system could be run on any plain old static server, such as Apache or nginx?

Stick with me and we’ll explore this topic. This information may be too basic for advanced developers, but for most managers, this introduction may be long overdue.

A bit of history

Long ago, most websites were static. All they served were pages: HTML, text, and images. Hyperlinks allowed us to navigate from one page to another. Most were content-rich websites containing information, entertainment, and photos. Now it seems unthinkable that clicking on hyperlinks was the only way to interact with the sites.

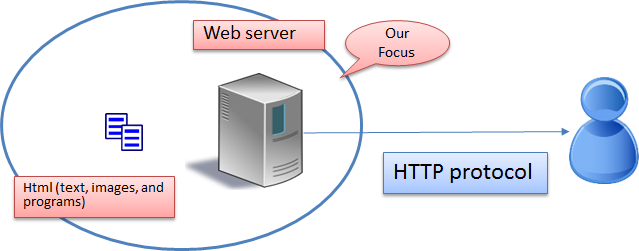

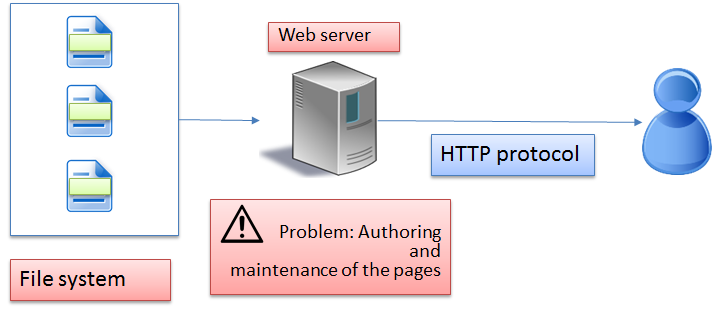

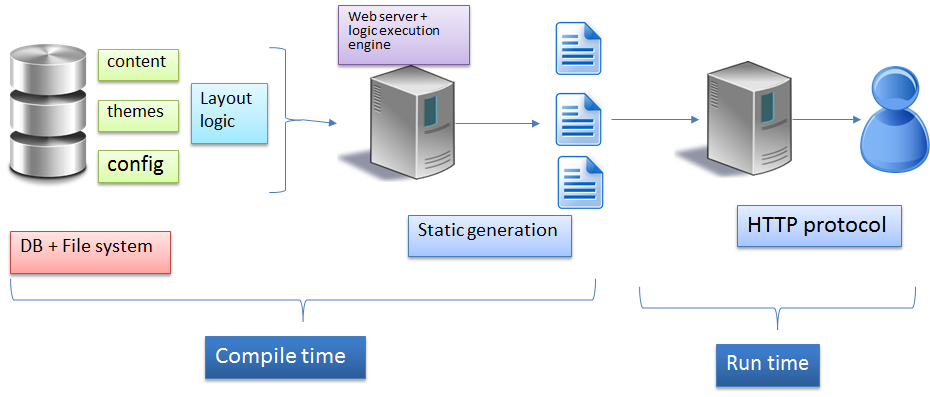

As shown in the picture, the protocol between the web server and the user is fixed: HTTP. How the web server interacts with the backend information (for a static website, that is HTML, CSS, JS, images, and such) is up to us. A static website serves this information straight out of the file system.

As readers began wanting to navigate sites in more interesting ways, or to interact with websites by adding content (like comments), we began to need server-side programming. Serving out of a file system won’t do. The application needs to understand complex requests and somehow use the information in files (or databases) to build the response. Since databases are a popular choice for keeping structured information, websites started serving as interfaces to databases, offering simple CRUD operations. In fact, applications like phpMyAdmin even let people manage databases over the web.

Coming back to the web applications I mentioned (e-commerce, digital marketing, document management systems): all of these websites are considered interactive, dynamic, and programmable, and therefore need a web application server. The moment you choose one platform (typically an app server, a language, a framework, and a set of libraries), you’re stuck with it forever.

Let’s see how we can design our way out.

Static web with automated look and feel support

Let us consider a simple case of information presentation. In this setup, all the consumers want to do is read the information. In that case, why not stick to a static website?

We can. The advantages are obvious. First and foremost, a static website is simple. It does not require any moving parts. Any old web server can serve static content. You can even run a static website with a simple one-liner in Python (python -m SimpleHTTPServer in Python 2.7, or python3 -m http.server).

It is also incredibly fast. The server simply has to send pages; it does not have to do any other computation. As such, it can be sped up even more by caching the content locally, or even by using a CDN (content delivery network). You can also use special-purpose web servers such as nginx that are optimized for serving static content.

Furthermore, it is simple to maintain. You can back it up in the cloud, move to a different provider, and even serve it from your own desktop. You do not need to back up a database or worry about software upgrades, portability issues, or even support.

But, what are the downsides? Let us consider the problems:

- How do you create nice-looking pages? Let us say that you want to create a standard look and feel. If you are the kind of person who hand-codes HTML, you will cut and paste the HTML template and then edit it. Pretty soon, it becomes complex. If you want to change the look and feel, you will have to go through all the pages and edit the same few lines in each.

- How do you create standard elements of a website? For example, you want a menu scheme. If you add a new area, then you have to go to each page and add a link in the menu to this new area of the website. Or, if you want a sitemap, you will find yourself constantly updating it.

Considering that a website has repetitive information depending on the context, the static way of maintaining a website is cumbersome. That is why, even for static websites, people prefer WordPress, Joomla, or Drupal, all of which are meant for creating websites dynamically.

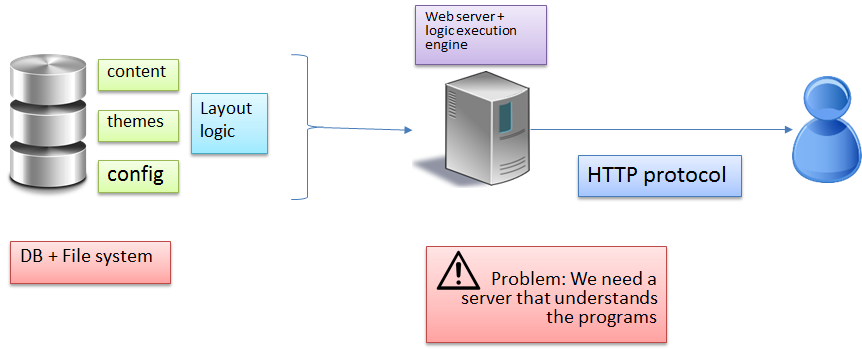

In these systems of dynamic content generation, the content is generated based on the request. No matter what application you use, it will have the following issues:

- The application becomes slow: The server needs to execute the program to generate a page and serve the page. Compare it to the earlier scenario where the server merely had to read the file from the file system and serve! In fact, the server can even cache it, if the file doesn’t change often.

- The application becomes complex: All the flexibility of combining content with the themes based on configuration comes at a price. The application server has to do many things.

Consider the following full description of the stack.

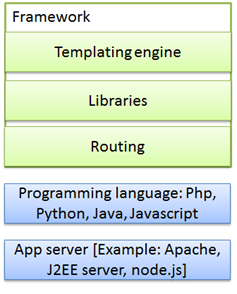

Any web server that generates pages dynamically needs to depend on an execution engine. It can be an engine that interprets a language, or executes a binary. For any language, we need a framework. For instance, if we are using JavaScript, we can use Express as the framework. This framework can do many things. At a bare minimum, it can route the calls: that is, it interprets any request and maps the request to the right response. Next, it needs to have libraries that deal with writing the logic to actually manipulate the data. To present the data, we need a templating engine.


Of course, there are fancy names for the parts of this kind of framework: the model (the data), the view (the templates and so on), and the controller (the routing), or MVC for short.

The problem with any stack is that once you are committed to it, it is incredibly difficult to change. To make matters worse, the frameworks that let you start easily (for example, a lot of PHP-based frameworks) are not the best for enterprise-strength requirements (unlike, say, Java, which has excellent libraries for all kinds of integration). Ask Facebook! They started with PHP and had to do a lot of optimization to get the performance they wanted.

How can we do better? Can we still use a static website and make it more usable? Reduce the costs of maintenance? The answer is yes. If we separate content generation from content delivery, we can get the best of both worlds.
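To make the moving parts concrete, here is a minimal sketch of the three roles in plain JavaScript, with no framework at all. Every name in it (`articles`, `renderArticle`, `routes`, `handleRequest`) is made up for illustration; this is not how Express or any particular framework is implemented, only the general shape of the work a dynamic server does on each request.

```javascript
// Model: the data (an in-memory object standing in for a database).
const articles = {
  1: { title: "Hello", body: "First post" },
  2: { title: "Static sites", body: "They are fast" },
};

// View: a template that turns data into HTML.
function renderArticle(article) {
  return `<h1>${article.title}</h1><p>${article.body}</p>`;
}

// Controller: a routing table that maps a URL pattern to a handler.
const routes = [
  {
    pattern: /^\/articles\/(\d+)$/,
    handle: (match) => {
      const article = articles[match[1]];
      return article
        ? { status: 200, body: renderArticle(article) }
        : { status: 404, body: "Not found" };
    },
  },
];

// All of this code runs on every request, before anything is sent back.
function handleRequest(path) {
  for (const route of routes) {
    const match = path.match(route.pattern);
    if (match) return route.handle(match);
  }
  return { status: 404, body: "Not found" };
}

console.log(handleRequest("/articles/2"));
```

Even in this toy version, the server has to match the route, look up the data, and fill in the template for every single request.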

From this activity, what did we gain?

- We gained performance: The runtime has to serve static files, giving us several different options to improve the performance.

- We gained simplicity, somewhat: Technically, it is possible to mix and match different systems of generation (which is somewhat like using different languages and compiling down to machine code).

Readers can observe that content delivery networks can do this job of caching generated content on-the-fly, giving us the illusion of static generation of content.
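As a rough illustration of separating generation from delivery, the sketch below applies one shared template and one shared menu to every page at build time. The page data, the `siteMenu` entries, and the function names are all hypothetical; real generators do much more, but the principle is the same.

```javascript
// Shared look and feel: one menu and one template for the whole site.
const siteMenu = [
  { title: "Home", url: "/index.html" },
  { title: "About", url: "/about.html" },
];

function renderMenu(items) {
  const links = items.map((item) => `<a href="${item.url}">${item.title}</a>`);
  return `<nav>${links.join(" | ")}</nav>`;
}

function renderPage(page) {
  return [
    "<!DOCTYPE html>",
    `<html><head><title>${page.title}</title></head>`,
    `<body>${renderMenu(siteMenu)}<main>${page.content}</main></body></html>`,
  ].join("\n");
}

// Generation: runs once at build time, not once per request.
function buildSite(pages) {
  const output = {};
  for (const page of pages) {
    output[page.file] = renderPage(page);
  }
  return output; // file name -> finished HTML
}

const site = buildSite([
  { file: "index.html", title: "Home", content: "<p>Welcome</p>" },
  { file: "about.html", title: "About", content: "<p>About us</p>" },
]);
console.log(Object.keys(site));
```

Writing each entry of `site` to disk gives a folder that any static web server or CDN can deliver. Adding a menu entry means changing `siteMenu` once and regenerating, instead of editing every page by hand.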

Static website generators

If we are going to generate a static website, what options do we have? Let us see the alternatives.

- Firstly, we can use the same dynamic content generators and dump out the entire site as static pages. That is, if we are using Drupal, we can dump all the nodes. The same goes for WordPress, or any other content management system.

  The challenge is, what if there is no unique URL for a piece of content? A lot of content management systems offer multiple ways of accessing the same content. For example, Lotus Notes was notorious for such URL generation. Then, dumping the static content can be difficult. Moreover, these systems are not meant for static website generation; the limitations keep showing up as you start relying on them.

- Secondly, we can use WYSIWYG editors such as Dreamweaver. They can create static content, apply a theme, and generate the website. They come with several beautiful themes, icons, and support scripts as well.

  The challenge is that these systems are not programmable. Suppose you are generating content from an external system. These editors do not provide a way to automate the content ingestion and the updating of the website.

- Thirdly, we can use a programmable system that generates the content. These days, this is the favored approach. These systems generate or update the complete website just from text-based files. You can programmatically import content, update the website, and publish it to production, all through scripting. Furthermore, they offer standard templating and support for CSS and JavaScript libraries.

  The downside, of course, is that these systems are not mature. They are not general purpose either. Still, for an experienced programmer, this is a wonderful option.

There are several examples of the third type of generation system. The most popular ones are those that support blogging. For instance, Jekyll is a popular static website generator, written in Ruby, with a blog flavor. The content is authored in Markdown format. Octopress is a blogging framework built on Jekyll. In the JavaScript world, there are blacksmith, docpad, and a few more.

Out of all the contenders, for my use, I like hugo and docpad. Hugo is the simplest of the lot and extremely fast. Docpad is more versatile, supporting a lot of different kinds of formats, templates, and plugins. In hugo, all I had to do was create a folder hierarchy and drop in .md files as the content. Based on the folder structure, it creates the menus, content layout, and URLs. Docpad is a bit more complex, but it works in essentially the same way.
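The idea of deriving URLs and menus from the folder structure can be sketched in a few lines. This is not hugo's or docpad's actual algorithm; the file names and the URL scheme below are assumptions chosen only to show the principle.

```javascript
// Hypothetical content files, relative to a content folder.
const contentFiles = [
  "products/widgets.md",
  "products/gadgets.md",
  "about/team.md",
  "index.md",
];

// Map a content file to the URL of its generated page.
function toUrl(file) {
  const withoutExt = file.replace(/\.md$/, "");
  return withoutExt === "index" ? "/" : `/${withoutExt}/`;
}

// Group pages by their top-level folder to form a two-level menu.
function buildMenu(paths) {
  const sections = {};
  for (const file of paths) {
    const parts = file.split("/");
    if (parts.length < 2) continue; // top-level pages belong to no section
    const section = parts[0];
    if (!sections[section]) sections[section] = [];
    sections[section].push(toUrl(file));
  }
  return sections;
}

console.log(buildMenu(contentFiles));
```

Dropping a new file into a folder is then enough to give it a URL and a place in the menu the next time the site is generated.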

Static web with high interactivity

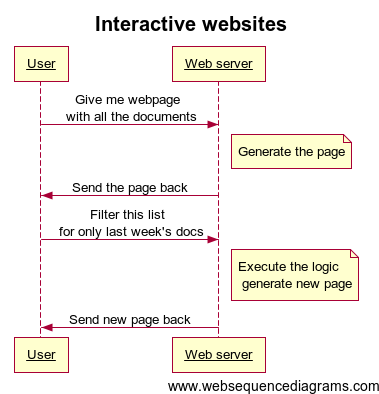

There is a big problem with the earlier approach. Consider the example we were giving about a document management system: what if we want to search for the right document? Or, sort the documents by date? Or, slice and dice the material? Or, draw graphs, based on the keywords?

For all these tasks, historically, we depended on the web server to do all the heavy lifting. Do you want to sort the documents? Send a request to the server. Do you want to filter the documents? Send another request.

While this kind of interaction between the user and the web server gets the job done, it is not terribly efficient. It is a burden on the server; it increases the bandwidth requirement; it feels sluggish to the user.

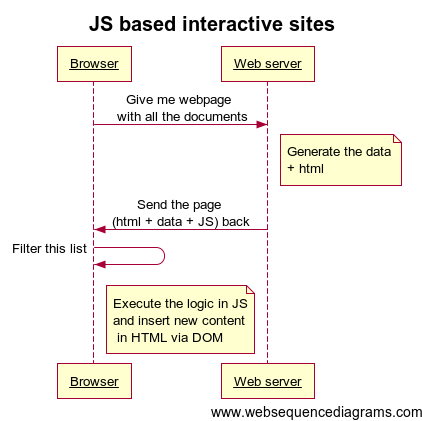

Thankfully, the situation changed with the advancement of JavaScript. It turns out that when an HTML page is digested by the browser, the browser creates a data structure called the DOM. With JavaScript, you can query and change the DOM. That means that at run time you can sort, filter, display, hide, animate, and draw graphs, all with the information already available to the browser.

With this kind of power, we can design interactive websites without going back to the server. With this additional requirement, how we develop web pages and what technologies we use will be different.

See the sequence diagram titled “JS based interactive sites”. The web server sends the data, HTML, and JavaScript the first time. After that, any interaction is handled by the script itself. It can compute the new content from the existing data, based on the user interaction, and make the necessary modifications to the page by changing the elements of the DOM.

The implications of this design option are enormous. We do not need to burden the server; we do not need to overload the network; we provide quick interaction to the customer.

The range of interactions is limitless. For instance, if we are offering an electronic catalogue of books, we can search the titles, sort by authors, filter by publishing date, and so on.

In fact, these kinds of interactions are common enough to have several mature libraries supporting them. For example, for the tasks I mentioned in the previous paragraph, I would use DataTables. If I were doing the same with millions of entries, I would use PourOver by NYTimes (which used this library for its Oscar awards fashion slicing-and-dicing web page).
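Without any library, the book catalogue operations mentioned above (search by title, sort by author, filter by publishing date) can be sketched in plain JavaScript. The `books` array is made-up sample data standing in for whatever the server sent along with the page.

```javascript
// Hypothetical catalogue, as it might arrive in a JSON file with the page.
const books = [
  { title: "Dune", author: "Herbert", year: 1965 },
  { title: "Neuromancer", author: "Gibson", year: 1984 },
  { title: "Foundation", author: "Asimov", year: 1951 },
];

// Case-insensitive substring search on titles.
function searchByTitle(list, term) {
  const needle = term.toLowerCase();
  return list.filter((b) => b.title.toLowerCase().includes(needle));
}

// Sort by author without mutating the original array.
function sortByAuthor(list) {
  return [...list].sort((a, b) => a.author.localeCompare(b.author));
}

// Keep books published in or after the given year.
function publishedSince(list, year) {
  return list.filter((b) => b.year >= year);
}

console.log(sortByAuthor(publishedSince(books, 1960)).map((b) => b.title));
// prints [ 'Neuromancer', 'Dune' ]
```

All three functions work purely on data already in the browser, so none of these interactions needs another round trip to the server. In a real page, the result would be written back into the DOM.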

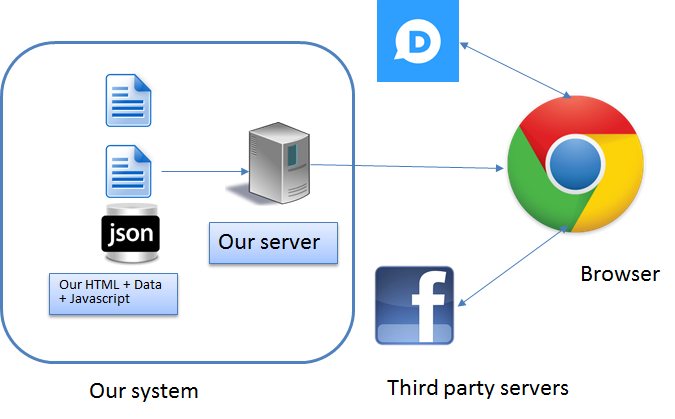

Static web for collaboration

For the kind of interactivity we discussed earlier, JavaScript libraries work well. If you think about it, all that those web pages provide is read-only interactivity. What if you want read/write interactivity?

For example, say you have a website with lots of content and you want to give people a way of adding comments. Take the case of Octopress, mentioned earlier: we may want to add commenting capability to those blog posts. How would we do that? We certainly need server-side capability for it.

Fortunately, the server-side capability need not come from us. We can easily use third-party servers for these capabilities. For instance, we can use Disqus or Facebook for comments. We can use Google Analytics to track the users.

In fact, the third-party server ecosystem is so strong these days that we can develop highly interactive websites with just static content served out of our own server. You can learn which services other leading web companies are using on their web pages from http://stackshare.io/.

For example, if you want to use payment services on your website, what kind of third-party service can you use? Look at the choices at http://stackshare.io/payment-services: Stripe, PayPal, Braintree, Recurly, Zuora, Chargify, Kill Bill, and so many others.

How do you integrate one of them into your website? Here is an example:

```html
<form action="/charge" method="POST">
  <script
    src="https://checkout.stripe.com/checkout.js" class="stripe-button"
    data-key="pk_test_6pRNASCoBOKtIshFeQd4XMUh"
    data-image="/img/documentation/checkout/marketplace.png"
    data-name="Stripe.com"
    data-description="2 widgets"
    data-amount="2000">
  </script>
</form>
```

This creates a checkout button. When the user checks out, it creates a token and hands it to the browser. You do need some server-side component that takes this token and charges the user, which goes beyond the usual static website I was describing. But for most other, less security-critical websites, you can conduct the updates from the browser itself.

For example, take a look at how you would integrate Google Analytics into your website:

```html
<!-- Google Analytics -->
<script>
(function(i,s,o,g,r,a,m){i['GoogleAnalyticsObject']=r;i[r]=i[r]||function(){
(i[r].q=i[r].q||[]).push(arguments)},i[r].l=1*new Date();a=s.createElement(o),
m=s.getElementsByTagName(o)[0];a.async=1;a.src=g;m.parentNode.insertBefore(a,m)
})(window,document,'script','//www.google-analytics.com/analytics.js','ga');

ga('create', 'UA-XXXX-Y', 'auto');
ga('send', 'pageview');
</script>
<!-- End Google Analytics -->
```

You will find this code on many websites. It posts page view data to the Google server.

Admittedly, most services need a server to interact with them, for security purposes. Nevertheless, the heavy lifting is done by some third-party web server.

Static website special case: Server-less setup

A special case of a static website is the desktop website. Suppose you want to share a folder with your friends. The easiest way is to put the folder in Dropbox and share it with them. Now suppose you want to provide some interactivity, say, searching the files. What would you do? You could host the files on a website, but that is too much trouble. You could run a local web server, but that is too complex for most people. Why not run the site over the file:// protocol, without requiring a server, by directly opening a file in the browser?

This approach works surprisingly well. The workflow could be as easy as this:

- Let people, including you, place the files in the shared folder.

- Watch the folder for updates (or do it periodically) and run a script that generates the data file.

- The user can open the index.html file, which uses the data file to create a web page.

- With a suitable library (like DataTables), the user can navigate, search, and sort the files.

This is a simple poor man’s content management service. You can enhance the document authoring process to add all other kinds of metadata to the files, so that you can slice and dice the folder more effectively.
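The script in the second step could look something like the following Node.js sketch. It is only one possible way to do it: the output file name `data.js` and the global variable `FILES` are assumptions of this example. The listing is written as a script rather than as a JSON file because many browsers restrict pages opened over file:// from fetching other local files, while a plain script tag pointing at `data.js` still loads.

```javascript
const fs = require("fs");
const path = require("path");

// Collect name, size, and modification time for each file in the folder.
function listFiles(folder) {
  return fs
    .readdirSync(folder)
    .filter((name) => name !== "index.html" && name !== "data.js")
    .sort()
    .map((name) => ({ name, stat: fs.statSync(path.join(folder, name)) }))
    .filter((entry) => entry.stat.isFile()) // skip sub-folders
    .map(({ name, stat }) => ({
      name,
      bytes: stat.size,
      modified: stat.mtime.toISOString(),
    }));
}

// Write the listing as a script that index.html can load with a script tag.
function writeDataFile(folder) {
  const entries = listFiles(folder);
  const source = `window.FILES = ${JSON.stringify(entries, null, 2)};\n`;
  fs.writeFileSync(path.join(folder, "data.js"), source);
  return entries;
}

// Example (hypothetical path): writeDataFile("/path/to/shared-folder");
```

The index.html page can then read `window.FILES` and hand it to a table library for searching and sorting.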

Static web for e-commerce: An exercise for you

Let us take it up one more notch. Can we design an entire e-commerce site as a static website (or, at least, with minimal server components)? We want to be able to display the catalogue and let the users browse, discover, and even read and write comments. In addition, we should let them add items to a shopping cart and check out. We may even want to add recommendations based on what they are looking at.

Now, how many items can we keep in the catalogue? Remember that images are in separate files. We only need the text data. Looking at general information, it is at most 2 KB per item. While there is no hard limit on the amount of data a browser can load, anecdotal evidence suggests that 256 MB is a reasonable limit. So, 100,000 items (about 200 MB) can be displayed in the catalogue without problems. Remember that all this data and the images can be served out of a CDN.

We can do one better. We do not have to load all the items at once. We can load parts of the catalogue on demand. If the commerce site has different divisions and the customer chooses one of them, we only need to load that part.

If we can reduce the number of items to, say, 10,000 to start with, that makes it less than 20 MB, which is the size of a small video. So, from a user experience perspective, it is entirely reasonable to load 20 MB for a highly interactive site.
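The arithmetic behind these estimates is easy to check. The sketch below uses the figures from the text (2 KB per item, a 256 MB budget) and assumes binary units, that is, 1 KB = 1024 bytes.

```javascript
const BYTES_PER_ITEM = 2 * 1024;        // 2 KB of text per item
const BUDGET_BYTES = 256 * 1024 * 1024; // 256 MB browser budget

// Size of a catalogue with the given number of items, in MB.
const catalogueMB = (items) => (items * BYTES_PER_ITEM) / (1024 * 1024);

// Largest catalogue that fits in the budget.
const maxItems = Math.floor(BUDGET_BYTES / BYTES_PER_ITEM);

console.log(maxItems);            // 131072
console.log(catalogueMB(100000)); // 195.3125
console.log(catalogueMB(10000));  // 19.53125
```

So 100,000 items fit within the 256 MB budget with room to spare, and a 10,000-item division comes in just under 20 MB.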

What about other services? We can manage the cart in JavaScript. The actual checkout (payment, receipt, and communication with the backend) needs to be done on an actual server. Anything less would make the system less secure. Anybody with knowledge of JavaScript can easily spoof the browser, so it is best not to let the browser make direct calls to a backend that assumes the data coming from the browser is valid. All you are doing in the browser is providing the integration!
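The split between browser and server can be sketched as follows. The browser keeps only item ids and quantities, and the server recomputes the total from its own price list instead of trusting any amount sent by the browser. The item ids, the prices, and the function names are hypothetical, and the payment call itself is left out.

```javascript
// Browser side: the cart is just item ids and quantities.
function addToCart(cart, itemId, quantity = 1) {
  return { ...cart, [itemId]: (cart[itemId] || 0) + quantity };
}

// Server side: prices (in cents) come from the server's own catalogue,
// never from anything the browser sent.
const serverPrices = new Map([
  ["book-1", 1500],
  ["book-2", 2500],
]);

function priceOrder(cart) {
  let totalCents = 0;
  for (const [itemId, quantity] of Object.entries(cart)) {
    const price = serverPrices.get(itemId);
    if (price === undefined) throw new Error(`Unknown item: ${itemId}`);
    if (!Number.isInteger(quantity) || quantity <= 0) {
      throw new Error(`Invalid quantity for ${itemId}`);
    }
    totalCents += price * quantity;
  }
  return totalCents;
}

let cart = {};
cart = addToCart(cart, "book-1", 2);
cart = addToCart(cart, "book-2");
console.log(priceOrder(cart)); // 5500
```

A spoofed browser can still send any cart it likes, but it cannot change what the items cost or sneak in a negative quantity.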

We can think of some more modifications. What if we design a mobile application? We only need to ship the deltas in the catalogue. After choosing the catalogue, the application can send a request to fulfillment with some additional credit card information.

Now, go ahead and do the following tasks:

- Draw the technical architecture diagram.

- Create a data model and generate sample data in JSON.

- Create a set of JavaScript programs to support the following activities:

  - Users browsing the catalogue

  - Adding items to the cart

  - Checking out the cart (here, you might need some server components; run them on a different server)

  - Searching the catalogue

  - Managing the cart

- For additional credit, do the following:

  - Cross-selling and upselling (people who bought what you looked for also bought the following; or, buy this additional item at a discount). Discuss the security implications.

  - Develop a mobile application that caches the entire catalogue on the device. Figure out a way to keep the catalogue data synchronized on demand.

Have fun!